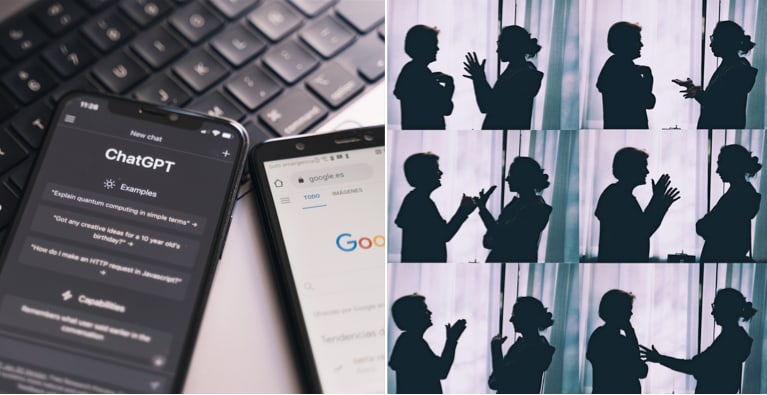

New AI race: Can smaller models talk more like humans?

Would AI chatbots such as ChatGPT and Bard sound more human in their language if they knew fewer words? That is the question behind the BabyLM Challenge project, writes The New York Times.

A young group of academics has asked research teams to build working language models trained on less than one ten-thousandth of the data used by the largest models. The idea is that the models will be smaller, but also more accessible.

"We are challenging people to think small and focus more on building efficient systems that more people can use," says Johns Hopkins researcher Aaron Mueller, one of the organizers.

Background

Language model

Wikipedia (en)

A language model is a probability distribution over sequences of words. Given any sequence of words of length m, a language model assigns a probability $P(w_1, \ldots, w_m)$ to the whole sequence. Language models generate probabilities by training on text corpora in one or many languages. Given that languages can be used to express an infinite variety of valid sentences (the property of digital infinity), language modeling faces the problem of assigning non-zero probabilities to linguistically valid sequences that may never be encountered in the training data. Several modelling approaches have been designed to surmount this problem, such as applying the Markov assumption or using neural architectures such as recurrent neural networks or transformers.
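As a rough illustration of the Markov assumption mentioned above, the sketch below trains a bigram model, in which each word depends only on the word before it. It uses add-one smoothing so that a valid but unseen sentence still receives a non-zero probability. The toy corpus, the function names and the choice of add-one smoothing are assumptions made for this example and do not come from the article. Words outside the training vocabulary are not handled.

```python
from collections import Counter
import math

def train_bigram(corpus):
    """Count contexts and bigrams over whitespace-tokenized sentences."""
    contexts, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])             # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    # Tokens the model can predict: all observed words plus the end marker.
    vocab = {t for s in corpus for t in s.lower().split()} | {"</s>"}
    return contexts, bigrams, vocab

def bigram_prob(prev, word, contexts, bigrams, vocab):
    """Add-one smoothed estimate of P(word | prev)."""
    return (bigrams[(prev, word)] + 1) / (contexts[prev] + len(vocab))

def sequence_log_prob(sentence, contexts, bigrams, vocab):
    """log P(w_1, ..., w_m) under the first-order Markov assumption."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    return sum(
        math.log(bigram_prob(prev, word, contexts, bigrams, vocab))
        for prev, word in zip(tokens, tokens[1:])
    )

# Hypothetical toy corpus, used only for illustration.
corpus = ["the cat sat", "the dog sat", "the cat ran"]
contexts, bigrams, vocab = train_bigram(corpus)

# "the dog ran" never occurs in the corpus but still gets a finite score.
print(sequence_log_prob("the dog ran", contexts, bigrams, vocab))
print(sequence_log_prob("sat the cat", contexts, bigrams, vocab))
```

On this corpus the unseen but well-formed "the dog ran" scores higher than the scrambled "sat the cat". That is the behaviour the paragraph describes, though a realistic model would need far more data and a better smoothing method.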

Language models are useful for a variety of problems in computational linguistics; from initial applications in speech recognition to ensure nonsensical (i.e. low-probability) word sequences are not predicted, to wider use in machine translation (e.g. scoring candidate translations), natural language generation (generating more human-like text), part-of-speech tagging, parsing, optical character recognition, handwriting recognition, grammar induction, information retrieval, and other applications.

Language models are used in information retrieval in the query likelihood model. There, a separate language model is associated with each document in a collection. Documents are ranked based on the probability of the query $Q$ in the document's language model $M_d$: $P(Q \mid M_d)$. Commonly, the unigram language model is used for this purpose.
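The ranking step can be sketched in a few lines, assuming a unigram model per document in which P(Q | M_d) is the product of the per-term probabilities. To keep a single missing query term from forcing the score to zero, the sketch mixes each document's estimate with a collection-wide estimate (Jelinek-Mercer-style interpolation). That smoothing choice, the `lam` parameter, the function name and the sample documents are all assumptions made here, not part of the text above.

```python
from collections import Counter
import math

def rank_by_query_likelihood(query, documents, lam=0.5):
    """Return document indices sorted by log P(Q | M_d), best first."""
    doc_tokens = [doc.lower().split() for doc in documents]
    collection = Counter(t for tokens in doc_tokens for t in tokens)
    collection_size = sum(collection.values())

    scores = []
    for index, tokens in enumerate(doc_tokens):
        counts = Counter(tokens)
        log_p = 0.0
        for term in query.lower().split():
            # Unigram estimate from the document itself.
            p_doc = counts[term] / len(tokens) if tokens else 0.0
            # Background estimate from the whole collection.
            p_coll = collection[term] / collection_size if collection_size else 0.0
            p = lam * p_doc + (1 - lam) * p_coll
            if p == 0.0:  # term appears nowhere in the collection
                log_p = float("-inf")
                break
            log_p += math.log(p)
        scores.append((log_p, index))

    ranked = sorted(scores, key=lambda pair: pair[0], reverse=True)
    return [index for _, index in ranked]

# Hypothetical documents, used only for illustration.
documents = [
    "small language models learn from child directed speech",
    "large models need billions of words",
    "speech recognition uses language models",
]
print(rank_by_query_likelihood("language models", documents))  # [2, 0, 1]
```

The shortest document containing both query terms ranks first, because each term takes up a larger share of its unigram distribution.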

Since 2018, large language models (LLMs) consisting of deep neural networks with billions of trainable parameters, trained on massive datasets of unlabelled text, have demonstrated impressive results on a wide variety of natural language processing tasks. This development has led to a shift in research focus toward the use of general-purpose LLMs.

Omni is politically unaffiliated and independent. We strive to offer more perspectives on the news. Do you have questions or comments about our reporting? Contact the editorial team.